Too many design leaders are still waiting.

Waiting for product to define the strategy.

Waiting for clarity before they weigh in.

Waiting for permission to lead.

But in an AI-first world, the waiting game is over.

We’re in the midst of a once-in-a-generation shift in how products are built and experienced, and in how they evolve. Intelligence is no longer hidden behind the interface; it is the interface. As systems become adaptive, multimodal, and context-aware, experience is no longer downstream from strategy. It is the strategy.

And yet, many design leaders remain stuck in reactive mode, executing with polish but hesitating to direct, waiting for alignment instead of driving clarity, and focused on flows when they should be framing futures.

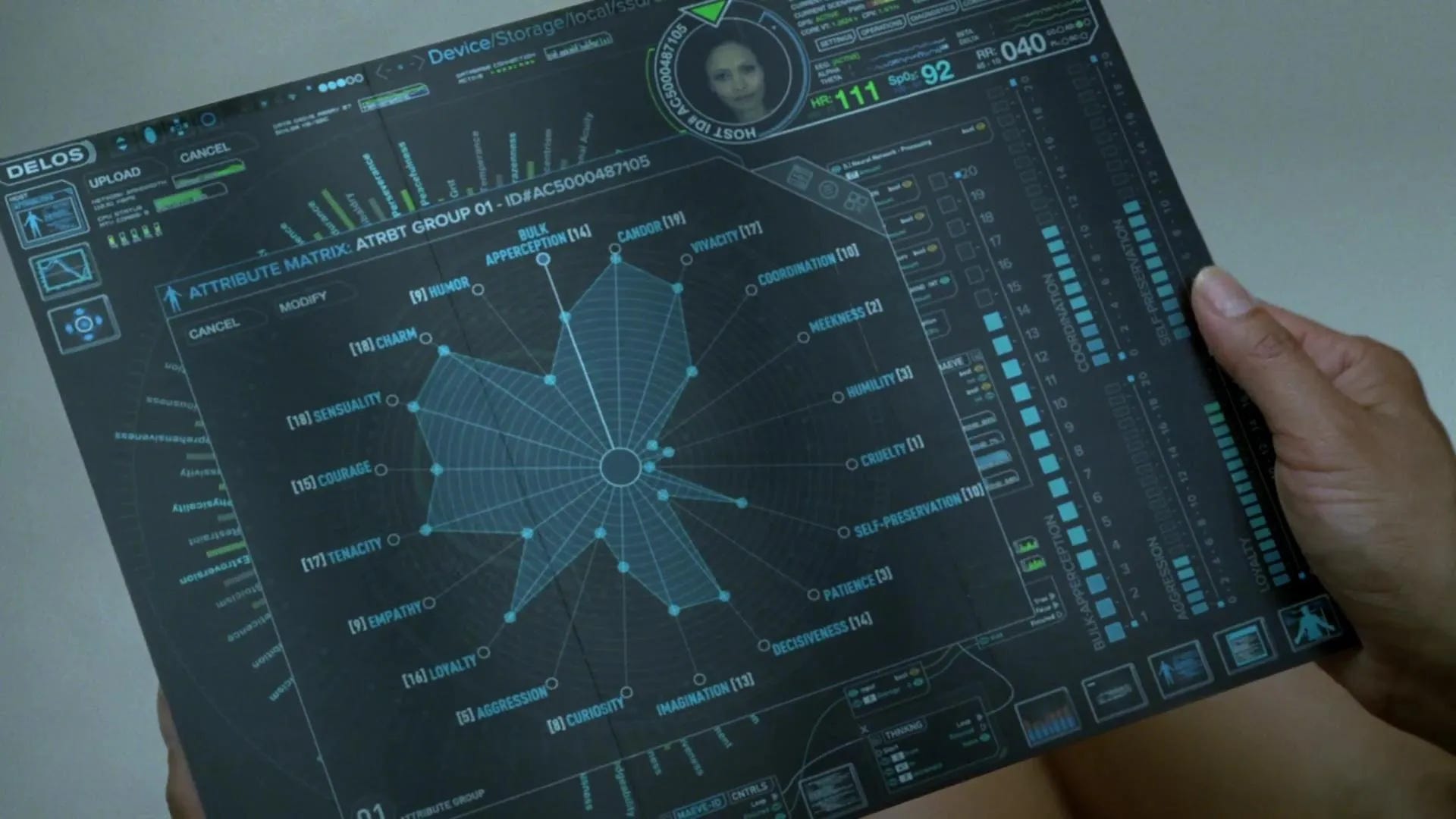

We’ve entered a new era of product development, one where systems learn in real time, interfaces adapt on the fly, and intelligence shapes every interaction. We’re not designing static screens for predictable use cases. We’re designing responsive, behavioral systems.

In this context, if you’re not shaping the system — its intelligence, timing, and tone — someone else is.

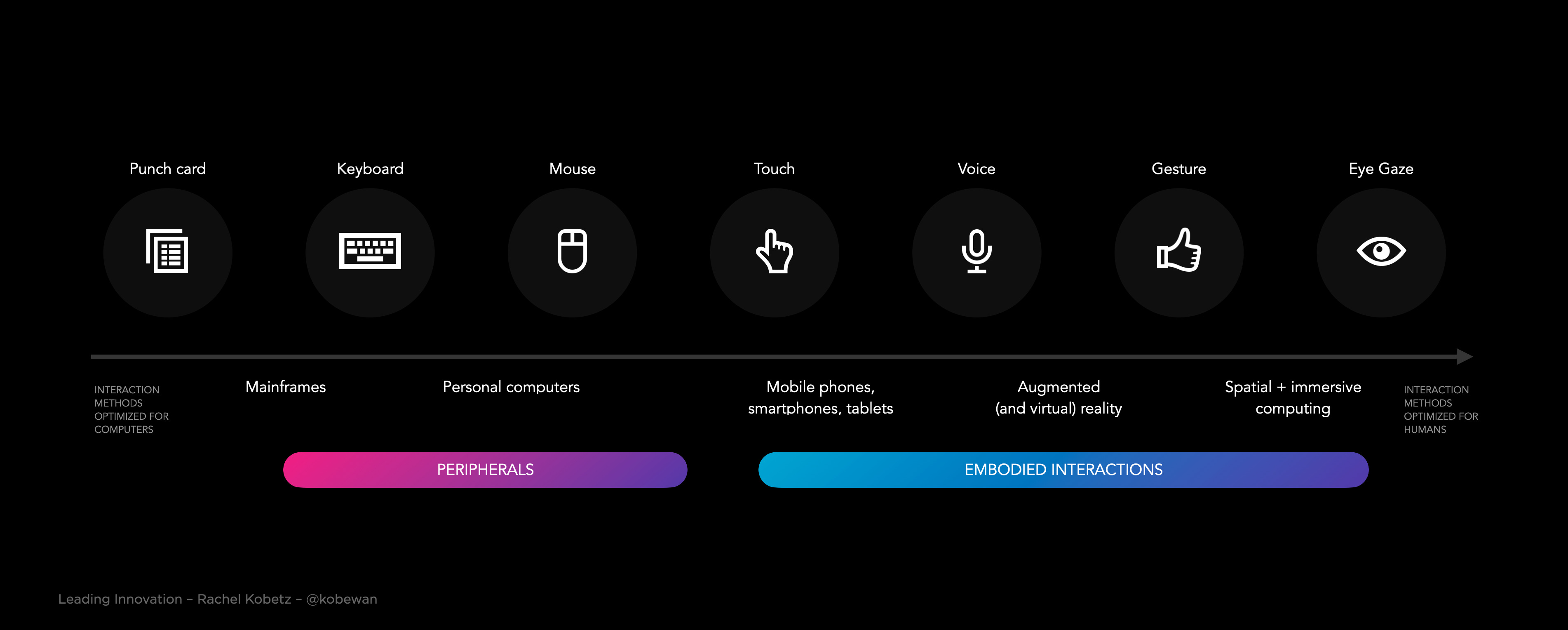

Intelligence Has Moved Into The Interface

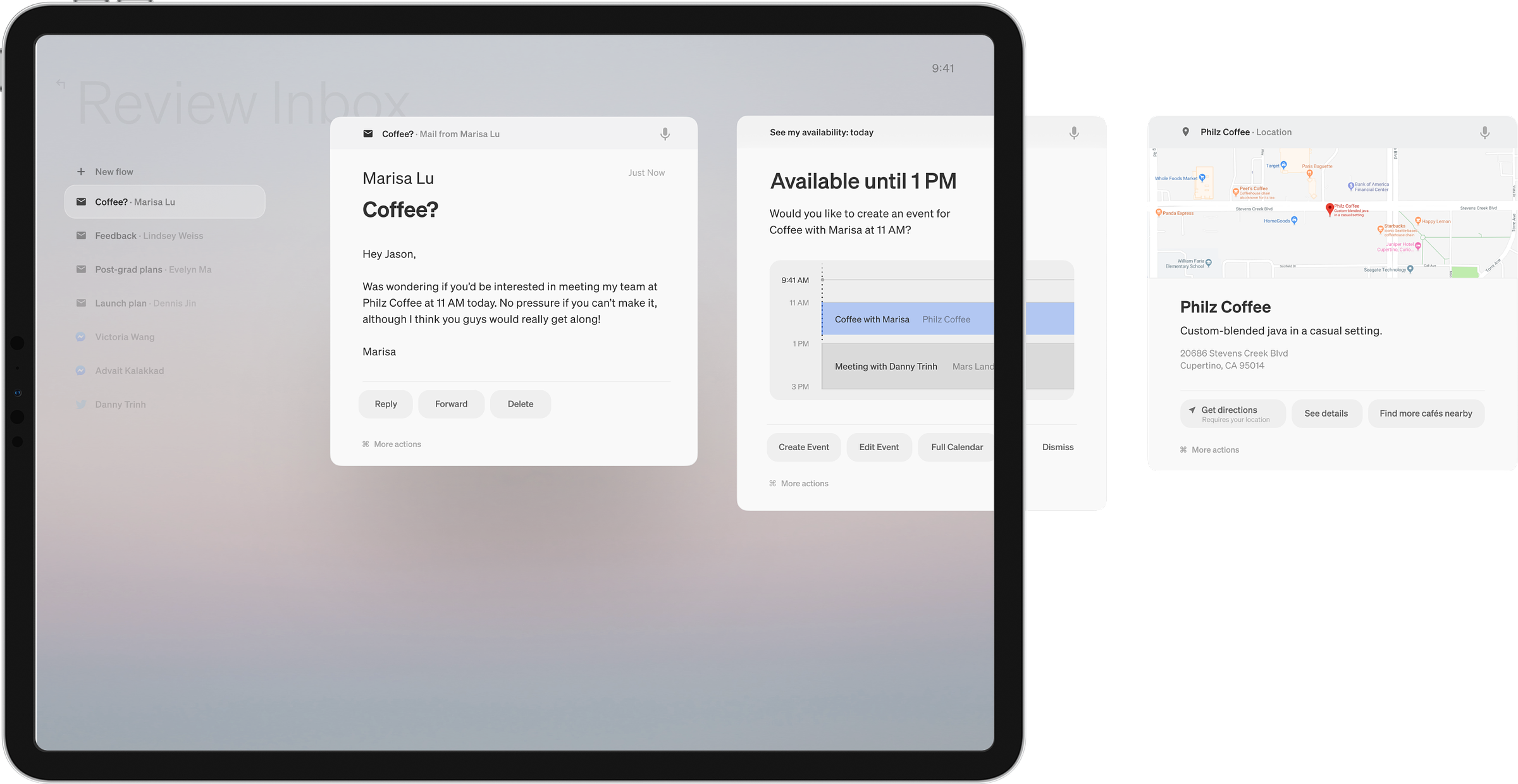

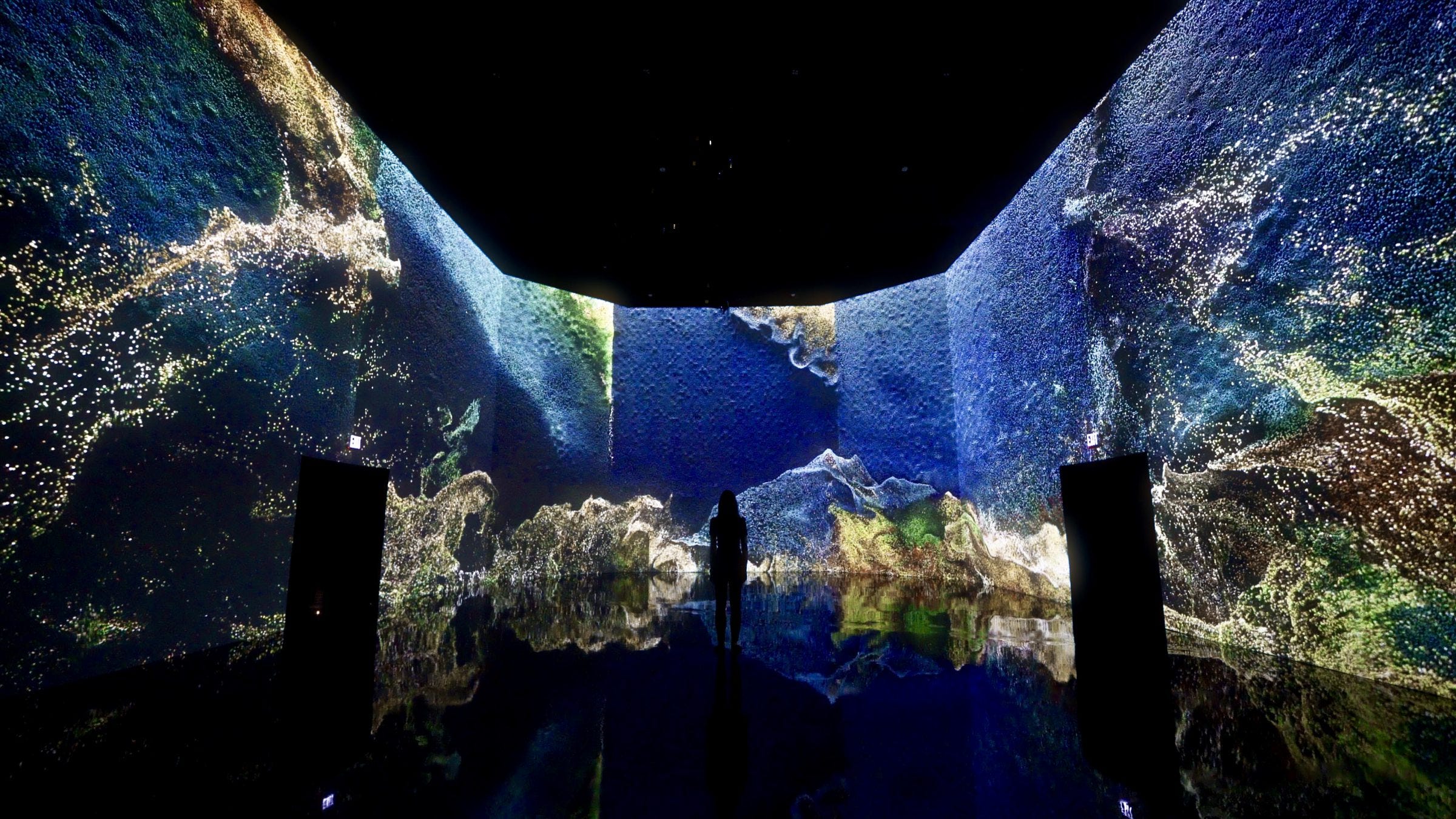

In this new era, interface and intelligence are intertwined. As I wrote in Decoding the Future, the interface of the future is ambient, ephemeral, and embedded: not something you use, but something you live with. These systems listen, learn, and adapt in real time. They don’t wait for static requirements or perfectly groomed backlogs. They evolve through behavior, context, and nuance.

Designers are uniquely equipped to lead in this terrain, if we choose to.

This is no longer about crafting screens. It’s about defining system behaviors, setting ethical boundaries, anticipating unintended consequences, and shaping the architecture of interaction itself. What the system does, how it learns, when it shows up — those are design decisions. Strategic ones.

So, if design isn’t leading that conversation, who is?

Design Is Now Directional

AI-native products don’t operate on a fixed set of flows. They generate outcomes through interaction. They learn through behavior. They personalize at the edge.

That changes the role of design entirely.

Design is no longer the final wrapper on someone else’s thinking. It’s not the last mile. It’s the system’s behavior, the invisible architecture that makes intelligence useful, usable, and trusted.

Design leaders need to own that. Not wait for a spec. Not polish the edges. But step into the center of the system and decide how it works, how it feels, and what it enables.

Product Leadership Is Evolving — So Should We

AI-native product teams aren’t waiting for specs. They’re shaping problems with LLMs in the loop. They’re iterating live. They’re integrating intelligence from day one. In these organizations, the product lifecycle isn’t linear; it’s generative. And what’s needed most is a vision that can make sense of all that complexity.

Not product managers in backlog triage.

Not engineers in optimization mode.

Not designers stuck refining Figma files.

What’s needed is a strategic voice that can see the whole system, architect end-to-end coherence, and hold the line on what great looks like in a world that’s changing in real time.

Design leaders, this is our moment — if we show up differently.

From Execution To Direction

In an AI-native world, execution is expected. The real value of design leadership is in shaping where we’re headed — not just how it looks.

That means seeing the opportunity before the brief exists.

Designing systems, not just surfaces.

Creating coherence, not just polish.

Leaning into ambiguity instead of waiting for certainty.

And influencing roadmaps instead of reacting to them.

Design has to operate at the level of product and business strategy, or risk being optimized out of relevance.

Many design teams are still working like it’s 2015 — refining handoffs, optimizing after decisions have been made, asking for a seat at the table. But AI-native products don’t wait. They learn in real time. They adapt to signals. And they require upstream, systems-level thinking from the start.

Leading the product doesn’t require a title. It requires timing, taste, and the courage to go first.

Strategic design leadership isn’t about being invited in.

It’s about showing up early with judgment, vision, and a point of view, and shaping the conversation before it starts.

This is the shift:

From execution to direction.

From refinement to authorship.

From influence to impact.

The Interface Is The System

In AI-native environments, we don’t just design what people see. We design how intelligence behaves.

How does the system initiate a conversation?

How does it build trust?

When does it show restraint?

These are no longer edge cases. They are product-defining decisions.

The rise of multimodal, context-aware interfaces, from projection mapping to voice, gesture, and ambient inputs, means that the old rules no longer apply. There are no wireframes for this. No playbooks. It’s live, learning, and improvisational.

Designers who stay in refinement mode will be outpaced.

Designers who can shape behavior, pattern interactions, and set ethical boundaries are the ones defining what’s next.

Lead From The Future

The most powerful design leaders I know aren’t waiting for permission. They’re out ahead, translating the shifts in human behavior, technology, and business models into bold experience bets.

They bring coherence when others bring chaos.

They create space for differentiation, not just delivery.

They make intelligence feel intuitive, not invisible.

This is the future of design leadership:

Cross-functional. Systems-minded. Market-aware.

Equally fluent in customer psychology, technical possibility, and product tradeoffs.

Not theorizing from the sidelines — but shaping from the center.

Lead The Conversation — Before It Starts

The most effective design leaders in this era aren’t waiting to be invited. They’re setting the agenda.

They translate shifts in behavior, market dynamics, and technology into bold product narratives. They build prototypes that provoke decisions. They ask sharper questions, surface blind spots, and bring system-level thinking that reframes the path forward.

They don’t wait for consensus; they create clarity.

This isn’t about influence theater. It’s authorship.

Design isn’t a partner in execution. It’s a strategic co-author of the product.

The future doesn’t need more decks about the value of design.

It needs design leaders who shape how teams think, how roadmaps form, and how intelligence behaves.

When design shows up early, with coherence, conviction, and vision, the rest of the system moves faster and smarter.

This Is The Mandate

Design leadership in an AI-native world is not a support function.

It’s not a craft silo.

And it’s not a team waiting for prioritization.

It’s a strategic function — driving vision, creating coherence, and accelerating outcomes.

If you’re leading design today, you’re being called into something more.

Not just better polish. Not just closer alignment.

But real authorship. Real influence. Real strategy.

Not every team will invite you in.

Not every partner will understand it.

But the ones who do? They’ll move faster, with more clarity and more ambition — because you didn’t wait.

So don’t wait for permission.

Don’t wait for the brief.

Don’t wait for the roadmap.

Don’t wait to be ready.

Lead from the middle.

Lead from the future.

But whatever you do — don’t wait.

References and inspiration:

- The Role of Human-Centered Leadership in the Age of AI

- Michael Riddering on AI-Native Product Teams

- The Future of Design Leadership in the Era of AI

- AI Native Product Teams: How They Will Think, Work, and Build Differently

- Reframing Design Leadership in the Age of Generative AI: Let’s Get Radical

- DesignGPT: Multi-Agent Collaboration in Design

- Microsoft’s design chief on human creation in the AI era

- Decoding The Future: The Evolution Of Intelligent Interfaces

- The Evolving Role of Designers in the AI Era: A Paradigm Shift

- Ross Lovegrove on working with AI: ‘The potential is, I think, utopian’

- Adobe Design Talks: Generative AI needs design leadership